Research Projects

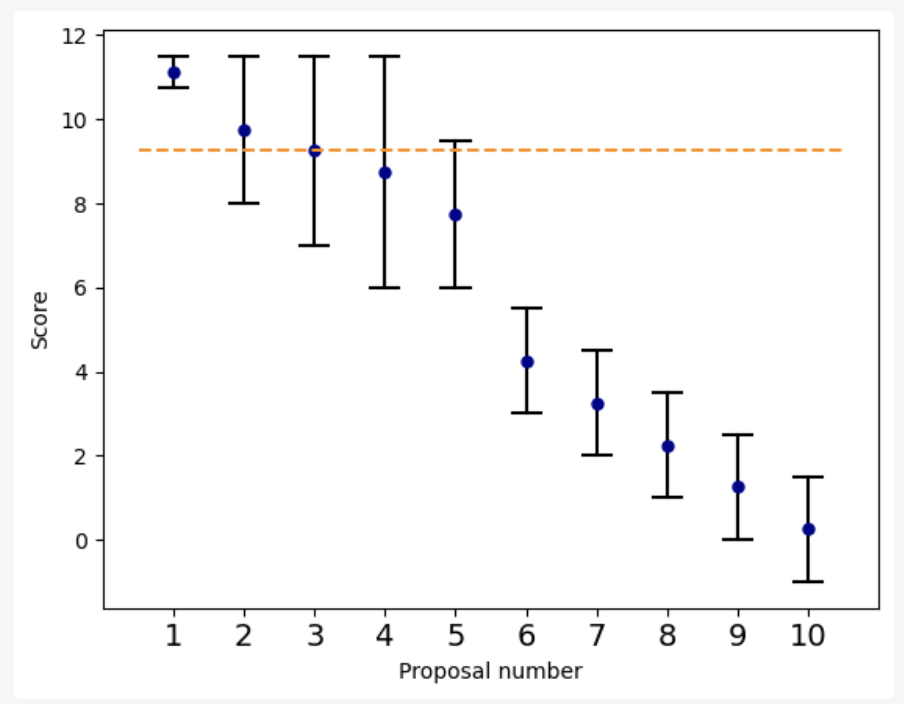

A Principled Approach to Randomized Selection under Uncertainty: Applications to Peer Review and Grant Funding

Alexander Goldberg, Giulia Fanti and Nihar B. Shah

NeurIPS, 2025 (Spotlight)

We develop a principled approach to randomizing competitive selection decisions under uncertainty about the relative quality of candidates, with applications to scientific grant funding and peer review.

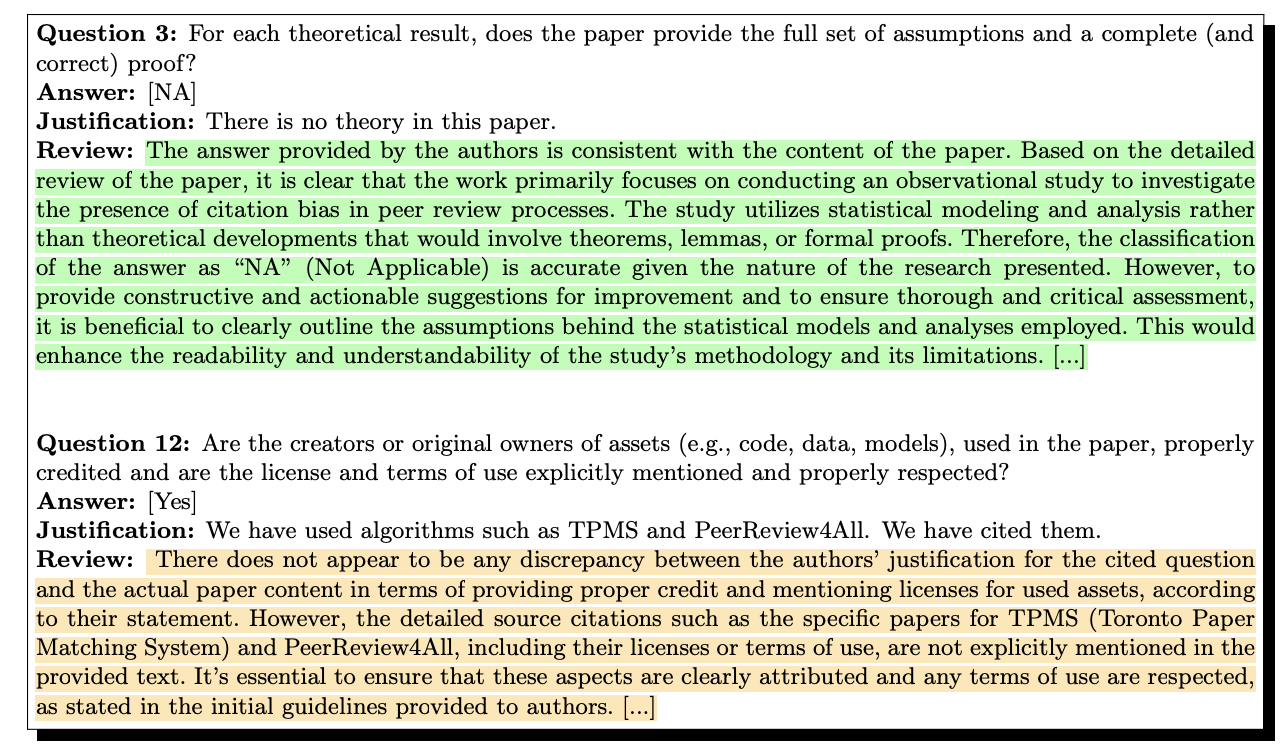

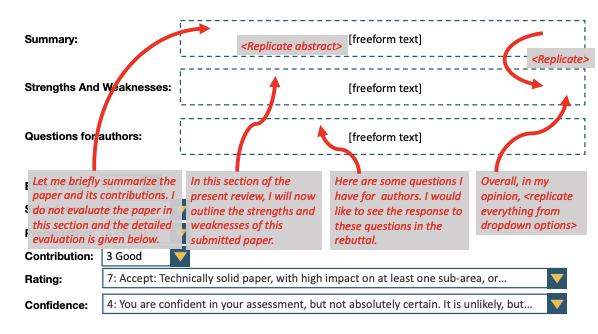

Usefulness of LLMs as an Author Checklist Assistant for Scientific Papers: NeurIPS'24 Experiment

Alexander Goldberg, Ihsan Ullah, Thanh Gia Hieu Khuong, Benedictus Kent Rachmat, Zhen Xu, Isabelle Guyon, Nihar B. Shah

arXiv, 2024 (Under submission)

We evaluate the use of LLMs for checking conference submissions by deploying a tool that checks papers against the NeurIPS author checklist.

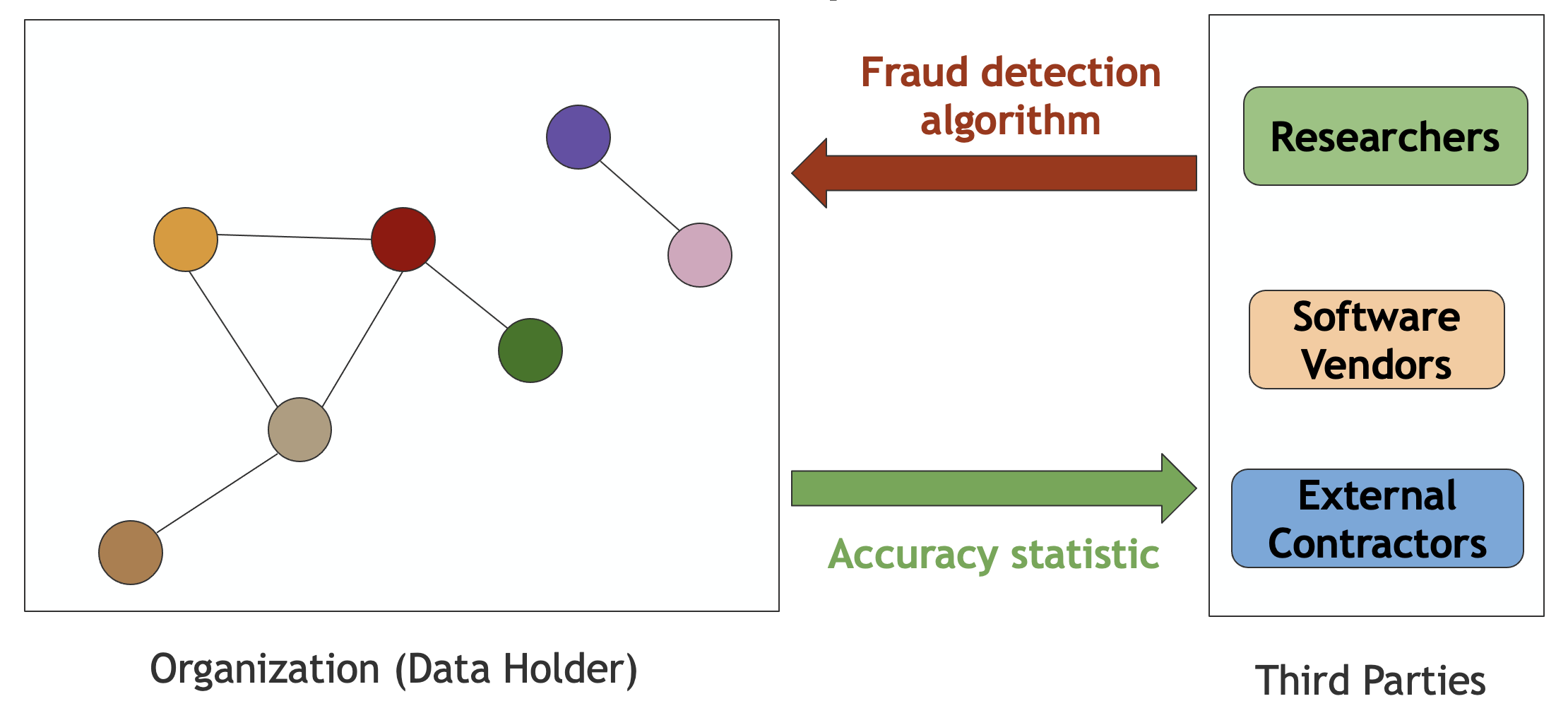

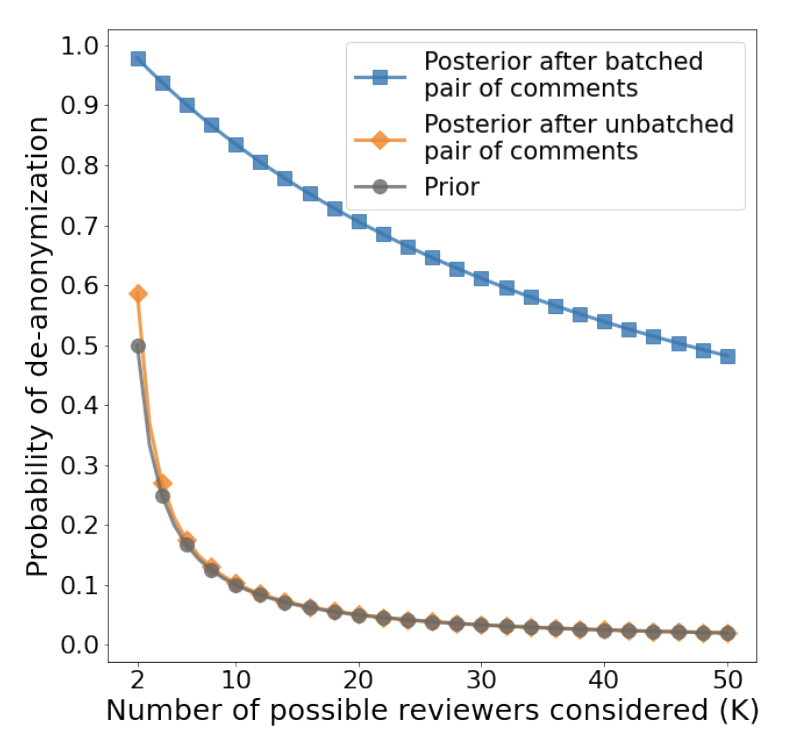

Benchmarking Fraud Detectors on Private Graph Data

Alexander Goldberg, Giulia Fanti, Nihar B. Shah, and Steven Wu

ACM KDD, 2025

We study the problem of benchmarking fraud detectors on private graph data, showing that evaluation results alone can enable nearly perfect de-anonymization attacks in realistic settings. We then analyze differential privacy–based defenses and find that existing methods face a fundamental bias–variance trade-off that limits their practical utility.

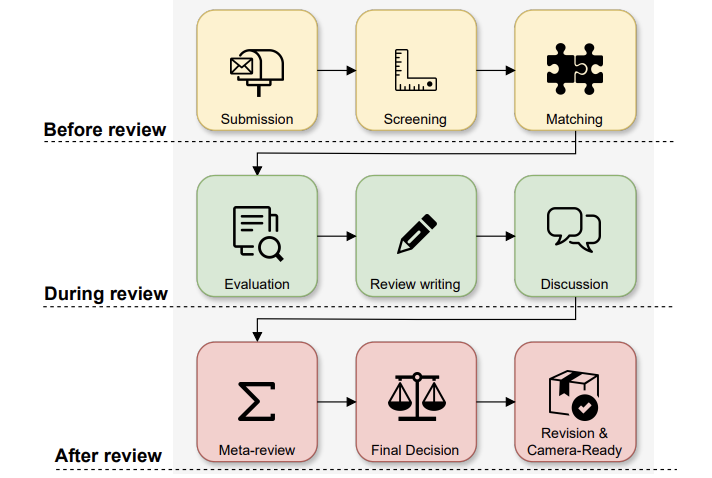

What Can Natural Language Processing Do for Peer Review?

Ilia Kuznetsov, Osama Mohammed Afzal, Koen Dercksen, Nils Dycke, Alexander Goldberg, Tom Hope, Dirk Hovy, Jonathan K. Kummerfeld, Anne Lauscher, Kevin Leyton-Brown, Sheng Lu, Mausam, Margot Mieskes, Aurélie Névéol, Danish Pruthi, Lizhen Qu, Roy Schwartz, Noah A. Smith, Thamar Solorio, Jingyan Wang, Xiaodan Zhu, Anna Rogers, Nihar Shah, Iryna Gurevych

arXiv, 2024

This paper surveys the role of NLP in supporting peer review, mapping opportunities and challenges across the review pipeline from submission to revision. It highlights key obstacles, such as data access, experimentation, and ethics, and offers a community call to action, supported by an open repository of peer review datasets.

Peer Reviews of Peer Reviews: A Randomized Controlled Trial and Other Experiments

Alexander Goldberg, Ivan Stelmakh, Kyunghyun Cho, Alice Oh, Alekh Agarwal, Danielle Belgrave, Nihar B. Shah

PLOS One, 2025; oral presentation at the International Congress on Peer Review and Scientific Publication, 2025

We conduct a randomized controlled trial and other analyses examining biases and other sources of error that arise when authors, reviewers, and area chairs are asked to evaluate the quality of peer reviews. We establish evidence of length bias, wherein evaluators rate uselessly elongated reviews as higher quality, as well as positive outcome bias, wherein authors prefer positive reviews of their own papers.

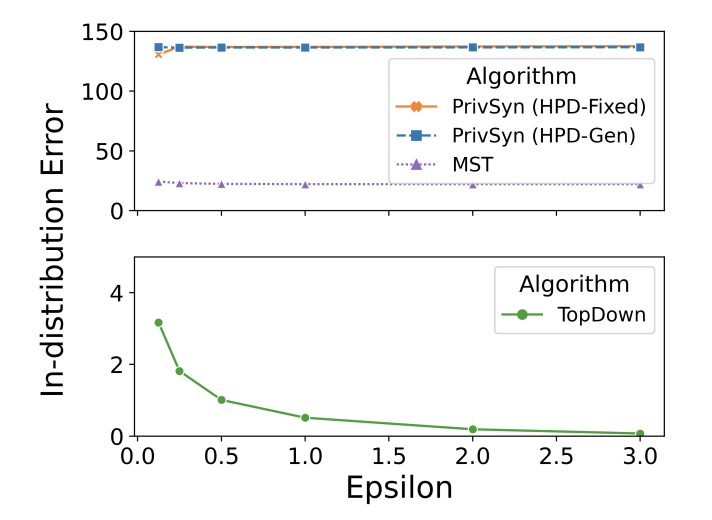

Benchmarking Private Population Data Release Mechanisms: Synthetic Data vs. TopDown

Aadyaa Maddi, Swadhin Routray, Alexander Goldberg, Giulia Fanti

PPAI Workshop @ AAAI 2024

We conduct a comprehensive comparison of synthetic data generation and TopDown approaches for private population data release, evaluating their utility-privacy tradeoffs across different demographic datasets.

Batching of Tasks by Users of Pseudonymous Forums: Anonymity Compromise and Protection

Alexander Goldberg, Giulia Fanti and Nihar B. Shah

ACM SIGMETRICS, 2023

We analyze how users' batching behavior on pseudonymous forums can compromise their anonymity, and we propose protection mechanisms that formally guarantee privacy while avoiding high added latency.

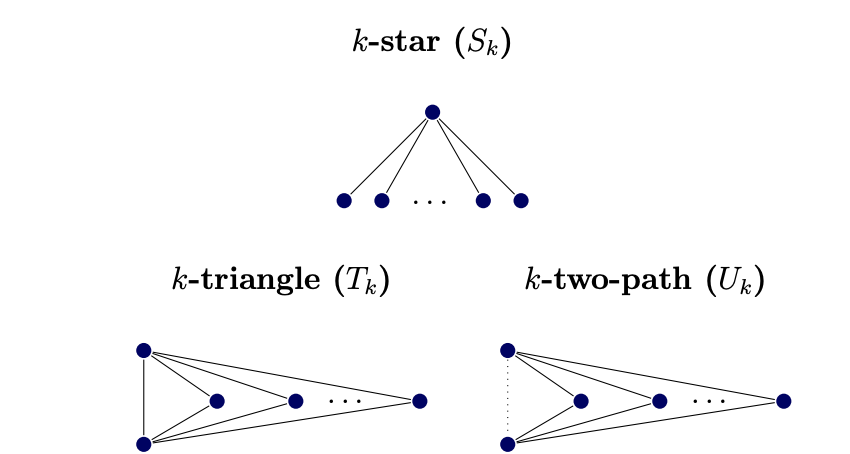

Towards Differentially Private Inference on Network Data

Alexander Goldberg (advisor: Salil Vadhan)

Undergraduate Thesis, 2018 (awarded the Thomas T. Hoopes Prize)

This thesis explores differentially private inference for probabilistic models of random graphs, developing methods that preserve privacy while enabling statistical analysis of network structure.